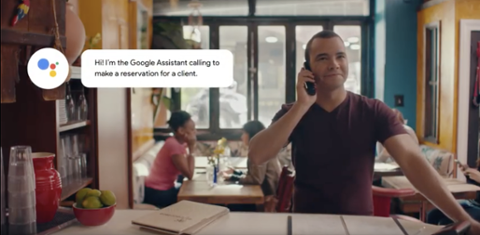

Do consumers trust artificial intelligence (A.I.)? That’s a key question confronting tech professionals who code A.I. and machine-learning applications. Without that trust, users will refuse to interact with these new apps, potentially strangling the nascent category of consumer A.I.

Clearlink recently surveyed 1,000 people to figure out how they felt about Google Duplex, an A.I. platform that will engage on the phone with customer-service representatives (its main use case, at least at the moment, is making restaurant reservations). As demonstrated at Google’s recent I/O conference, Duplex replicates human speech, even pausing every few seconds to say “um,” and doesn’t announce that it’s software as opposed to an actual human being.

Clearlink asked survey-takers if they viewed that as ethical. Some 35.3 percent of respondents felt that Duplex was “unethical,” versus 64.7 percent who did not.

“Participants’ initial lack of concern could have something to do with the existing prevalence of automated phone systems,” read Clearlink’s note accompanying the data. “Most people have interacted with a computer when contacting a large organization for customer service. The presence of such technology hasn’t caused ethical dilemmas before because these systems have always sounded automated, not human.”

Whether or not that familiarity with automated systems has anything to do with respondents’ trust levels, some 57.4 percent said they still wouldn’t let a “robot” such as Duplex speak on their behalf (42.6 percent said they would). And around 58.5 percent said they would communicate differently if they realized they were speaking to an automated system on the phone (versus 41.5 percent who said they wouldn’t).

Those numbers could reflect users’ unfamiliarity with this new technology: would you let a new and relatively untested system make important calls for you? They could also hint at a broader uneasiness about the A.I. platforms beginning to edge into our lives; we like the convenience of sophisticated software, but we’re not totally willing to give up control. The roughly one-third of respondents who consider Duplex unethical may be a minority, but that is still a strikingly high share if Google wants to make the technology ubiquitous.

Duplex, of course, isn’t the only example of an A.I. platform designed to make customer interactions more seamless. For the past few years, Facebook and other tech giants have promoted the use of bots, automated systems that can interact with users via text. As with Duplex, these bots rely heavily on scripts and don’t deal well with the unexpected. But as these platforms become more sophisticated, the line between machine and human will begin to blur, at least in certain contexts, and at that point A.I. researchers and developers might face some very thorny ethical questions.